Getting your website’s public-facing pages crawled and indexed by search engines is the most fundamental step in optimizing a website for greater visibility. In the past, achieving a good level of indexing was mostly a function of good internal linking, crawl budget management and inbound link acquisition as earlier search engines could only rely on links to lead them from webpage to webpage. In recent years, that limitation has mostly been eliminated as most websites have adopted a universal standard of keeping accurate inventories of their content libraries: the XML sitemap.

What is an XML Sitemap?

As the name implies, an XML sitemap is a document coded in the XML format whose function is to list all the URLs in a website that are available for crawling and indexing. It not only provides bots with a full list of all the public-facing pages in a website, it also gives search engines a better idea of the website’s information architecture, the hierarchy of its pages and the frequency of updates that happen on the website.
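To make the format concrete, here is a minimal sketch of what a sitemap looks like and how a script can read it. The two URLs and dates are placeholders we made up for illustration, and `list_sitemap_urls` is a hypothetical helper name; only the `urlset`, `url`, `loc` and `lastmod` elements and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical two-URL sitemap; the domain and dates are placeholders.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2024-01-15</lastmod>
  </url>
  <url>
    <loc>https://www.example.com/blog/xml-sitemaps</loc>
    <lastmod>2024-01-10</lastmod>
  </url>
</urlset>
"""


def list_sitemap_urls(xml_text):
    """Return (loc, lastmod) pairs for every <url> entry in a sitemap."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    entries = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=SITEMAP_NS)
        entries.append((loc, lastmod))
    return entries


for loc, lastmod in list_sitemap_urls(SAMPLE_SITEMAP):
    print(loc, lastmod)
```

Each `url` entry needs a `loc`; `lastmod` is optional, which is why the helper allows it to be missing.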

Sitemaps can be written manually or they can be generated using a variety of tools. These documents may also be static or dynamic. A static sitemap does not change regardless of what happens within the website, while a dynamic sitemap adds and removes URLs automatically when pages are created or deleted within the CMS. In modern SEO, automatically generated and dynamic XML sitemaps are often the preferred setup.
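The idea behind a dynamic sitemap can be sketched in a few lines: the file is rebuilt from the current page inventory every time it is requested. The example below is an assumption-heavy illustration, not how any particular CMS does it. The `pages` list stands in for a CMS database query, and the `published` and `lastmod` keys are hypothetical field names.

```python
import xml.etree.ElementTree as ET

SITEMAP_XMLNS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of page dicts.

    Only pages flagged as published are listed, so drafts and deleted
    pages drop out of the sitemap the next time it is generated.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_XMLNS)
    for page in pages:
        if not page.get("published"):
            continue
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["url"]
        if page.get("lastmod"):
            ET.SubElement(url, "lastmod").text = page["lastmod"]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical inventory standing in for a CMS query.
pages = [
    {"url": "https://www.example.com/about", "published": True, "lastmod": "2024-02-01"},
    {"url": "https://www.example.com/draft-post", "published": False},
]
print(build_sitemap(pages))
```

Because the output is derived from the inventory rather than edited by hand, the sitemap cannot drift out of sync with the site’s actual content.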

What are the benefits of having an XML Sitemap?

Setting up an XML sitemap and submitting it to search engines is one of the most important steps you can take to ensure that your website is properly indexed. When done correctly, you will likely experience the following benefits:

• Better Index Coverage. Barring any errors in your sitemap and violations of Google’s guidelines, having your URLs submitted in your XML sitemap makes it very likely that they will get crawled at some point and considered for inclusion in Google’s index. This is true even when the page is an “orphan” that has no internal links pointing to it.

• Better Crawl Prioritization. If the XML sitemap is written in such a way that URLs are arranged in a “cascading” manner from the home page to category pages, down to subcategories, then individual product or content pages, there’s a good chance that search engine algorithms will be able to figure out that this arrangement is based on the importance of each URL in your information architecture.

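Search engines may then give more crawl attention to URLs that are higher in your website’s content hierarchy than to those further down the ladder. Treat this as a possible benefit rather than a guaranteed one, since search engines do not promise that the order of URLs in a sitemap influences crawling or ranking.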

• Diagnostic Reports. Some search engines have free services that give you diagnostic reports on your website’s search visibility. Google Search Console (GSC) is far and away the most widely used platform of this kind. Submitting your XML sitemap to GSC not only helps Google find your sitemap more easily, it also prompts the platform to display detailed reports on it. These reports show which URLs have been indexed, which ones have errors and which ones are triggering warnings from the search engine.

How to Create an XML Sitemap

There are a few ways to add a sitemap to your website. The main ones include:

• Using a CMS Feature or a Plugin. Some modern CMS platforms have SEO feature suites that include sitemap generators. In the absence of such a feature, plugins may provide the desired solution. WordPress, for instance, has the Yoast SEO plugin which comes with one of the industry’s best XML sitemap generators.

• Using an Online Generator. Some free online tools, like our own XML Sitemap Generator, exist solely to help you generate XML sitemaps. Just keep in mind that these tools don’t always generate the most complete sitemaps because they rely on a crawler that follows links. If a page doesn’t have any internal links pointing to it, the generator will likely miss it.

• Hand-Coding It. If for whatever reason you don’t want to use automated means to generate your sitemap, it’s certainly possible to hand-code it if you know enough about writing XML documents. Just make sure to follow Google’s sitemap guidelines for best results. We don’t recommend going this route, but there may be some exceptions to that general advice.
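If you do hand-code a sitemap, a quick sanity check can catch the most common slips before you publish it. The sketch below checks only a few things: that the file is well-formed XML, that every `loc` is an absolute http or https URL, and that the file stays within the 50,000-URL and 50 MB (uncompressed) limits for a single sitemap file. `check_sitemap` is a hypothetical helper and is not a full validator.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

MAX_URLS = 50_000               # per-file URL limit
MAX_BYTES = 50 * 1024 * 1024    # per-file size limit, uncompressed
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def check_sitemap(xml_bytes):
    """Return a list of problems found in a sitemap (empty list = none found)."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file exceeds 50 MB uncompressed")
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return problems + [f"not well-formed XML: {exc}"]
    locs = [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", NS)]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs listed; the limit is {MAX_URLS}")
    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
    return problems


# Hypothetical hand-written sitemap with one relative URL by mistake.
HAND_CODED = (
    b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    b"<url><loc>https://www.example.com/</loc></url>"
    b"<url><loc>/about</loc></url>"
    b"</urlset>"
)
print(check_sitemap(HAND_CODED))
```

An empty list only means none of these specific checks failed; Search Console’s own report remains the more authoritative test.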

How to Help Search Engines Find Your Sitemap

In order for search engines to make use of your sitemap, they have to find it first. Sitemaps are typically not linked to from within the site’s pages and their file locations can vary depending on where you upload them and how you name them. As such, it’s best to do one of these two things to make sure the search engines find and crawl the document:

• Submit It to Google Search Console or Its Counterparts. The easiest and most effective way to make sure that search engines find your sitemap is by submitting it to their diagnostic service platforms, such as Google Search Console or Bing Webmaster Tools. Besides helping search engines find your sitemap, these platforms will also give you insights on the correctness of the document’s formatting as well as diagnostics on the URLs listed in it.

• Write Its URL in the Robots.txt File. You can make search engines aware of your XML sitemap’s location by listing it in your robots.txt file. Simply add a line that states “Sitemap: http://www.example.com.ph/sitemap.xml” and you’re all set.
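To confirm that the robots.txt line is in place and spelled correctly, you can read the file back the way a crawler would. This is a minimal sketch: `find_sitemaps` is a hypothetical helper, the sample file content is made up apart from the Sitemap line quoted above, and fetching the live file is left out.

```python
def find_sitemaps(robots_txt):
    """Return every sitemap URL declared in a robots.txt document."""
    sitemaps = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()    # drop trailing comments
        field, sep, value = line.partition(":")  # split on the first colon only
        if sep and field.strip().lower() == "sitemap":
            sitemaps.append(value.strip())
    return sitemaps


# Hypothetical robots.txt content for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: http://www.example.com.ph/sitemap.xml
"""

print(find_sitemaps(SAMPLE_ROBOTS))
```

The field name is matched case-insensitively, and the URL after it should be the full, absolute address of the sitemap.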

How to Validate Your XML Sitemap

While having an XML sitemap on your website is a good thing, simply having it up and running doesn’t necessarily mean it’s optimized to deliver the best SEO results possible. To ensure that you get the most out of your sitemap, execute this process at least once every 3 months:

1. Remove Unnecessary URLs. It’s pretty common to have live pages on your website that you’d rather not have indexed for reasons such as content duplication, thin content or inclusion in a paginated series of pages. Examples include blog tag archives, date archives, product SKU variant pages and more. Take these URLs off your sitemap.

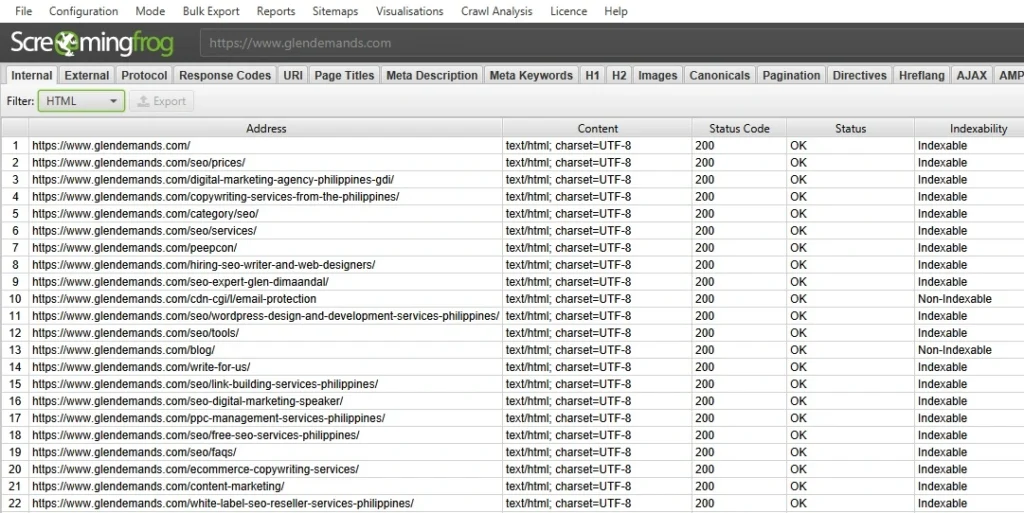
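When the unwanted pages follow predictable URL patterns, the cleanup in step 1 can be scripted. The patterns below (`/tag/`, year and month archives, `/page/N/`) are assumptions based on common blog URL structures, and `split_urls` is a hypothetical helper; adjust the patterns to match how your own site builds its URLs.

```python
import re
from urllib.parse import urlparse

# Assumed URL patterns for pages that usually do not belong in a sitemap.
EXCLUDE_PATTERNS = [
    re.compile(r"^/tag/"),               # blog tag archives
    re.compile(r"^/\d{4}/(\d{2}/)?$"),   # year or year/month date archives
    re.compile(r"/page/\d+/?$"),         # paginated series
]


def split_urls(urls):
    """Split URLs into (keep, drop) based on the exclusion patterns."""
    keep, drop = [], []
    for url in urls:
        path = urlparse(url).path
        if any(pattern.search(path) for pattern in EXCLUDE_PATTERNS):
            drop.append(url)
        else:
            keep.append(url)
    return keep, drop


urls = [
    "https://www.example.com/blog/xml-sitemaps/",
    "https://www.example.com/tag/seo/",
    "https://www.example.com/2023/05/",
    "https://www.example.com/blog/page/2/",
]
keep, drop = split_urls(urls)
print("keep:", keep)
print("drop:", drop)
```

Review the `drop` list by hand before acting on it, since a pattern that is too broad can remove pages you do want indexed.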

2. Crawl the XML Sitemap with a Tool. You can save a copy of your XML sitemap by pressing CTRL+S while your browser has it open. Opening the saved file in Microsoft Excel gives you the URLs in spreadsheet form, which you can then upload to tools like Screaming Frog or DeepCrawl to get diagnostic information on the status of each webpage listed.

Check the status code of each URL and watch out for the following values:

– 3xx Redirects. These server responses mean that the URL has been redirected. If this is the case, it’s best to take the URL off the sitemap and list the URL it’s pointing to instead.

– 4xx Errors. This group of server responses signifies that the webpage either does not exist or is experiencing technical issues. If the status code is a result of deliberate deletion and is permanent, remove the URL from the sitemap.

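– 401 Unauthorized and 403 Forbidden. Some pages require login authentication to access. Since search engine bots can’t log in, they receive a 401 Unauthorized or 403 Forbidden response instead of the page. Both are 4xx codes; 5xx codes indicate server errors, which call for a fix on the server rather than a sitemap edit. If any login-protected pages are listed in your sitemap, remove them.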
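The status code checks above boil down to a small decision table. The sketch below maps a status code, as reported by your crawling tool, to a suggested sitemap action. Login-protected pages are represented by their standard HTTP codes, 401 and 403. `sitemap_action` and the wording of each action are our own illustration rather than output from any crawling tool.

```python
def sitemap_action(status):
    """Suggest what to do with a sitemap URL based on its HTTP status code."""
    if 200 <= status < 300:
        return "keep"
    if 300 <= status < 400:
        return "replace with the redirect target"
    if status in (401, 403):
        return "remove (login required or forbidden)"
    if status in (404, 410):
        return "remove if the deletion is permanent"
    if 400 <= status < 500:
        return "investigate the client error"
    if 500 <= status < 600:
        return "investigate the server error"
    return "unknown status"


# Hypothetical crawl results: URL -> status code.
crawl_results = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 301,
    "https://www.example.com/account": 403,
    "https://www.example.com/deleted": 404,
}
for url, status in crawl_results.items():
    print(status, url, "->", sitemap_action(status))
```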

3. Take Note of Meta Robots Tags. In relation to the first point, some pages in your XML sitemap may be non-indexable due to special HTML tags that affect indexing behavior. If you see any of these tags reported by your crawling tool of choice, confirm whether the tag placement is legitimate or not. If the tags were put into the pages for valid reasons, remove the URL from the sitemap:

– Noindex

– Rel=canonical pointing to another page.

– Rel=prev and rel=next pointing to other pages.

Additionally, if a URL is blocked by the robots.txt file for valid reasons, take it off the sitemap.

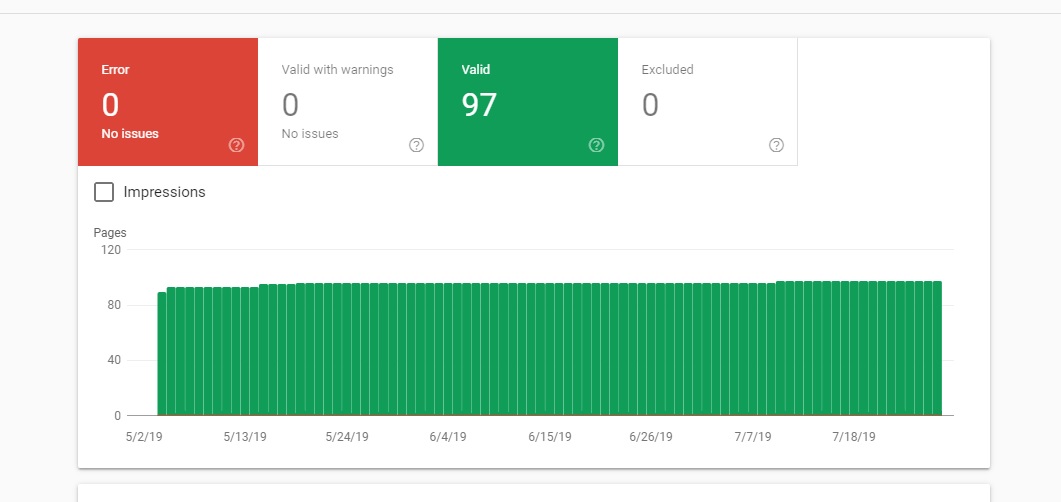
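If your crawling tool doesn’t report these tags, a short script can spot the two most common ones, noindex and a canonical pointing elsewhere. This is a simplified sketch with hypothetical names (`IndexabilityParser`, `belongs_in_sitemap`). It compares the canonical URL as an exact string, so relative canonicals and trailing-slash differences are not handled, and it ignores the X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser


class IndexabilityParser(HTMLParser):
    """Collect the meta robots noindex directive and the canonical URL."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = (attrs.get("content") or "").lower()
            if "noindex" in [part.strip() for part in content.split(",")]:
                self.noindex = True
        elif tag == "link":
            rels = (attrs.get("rel") or "").lower().split()
            if "canonical" in rels:
                self.canonical = attrs.get("href")


def belongs_in_sitemap(url, html):
    """Return False if the page is noindexed or canonicalized to another URL."""
    parser = IndexabilityParser()
    parser.feed(html)
    if parser.noindex:
        return False
    if parser.canonical and parser.canonical != url:
        return False
    return True


# Hypothetical page: a URL with a tracking parameter, canonicalized to the clean URL.
page_html = '<head><link rel="canonical" href="https://www.example.com/shoes/"></head>'
print(belongs_in_sitemap("https://www.example.com/shoes/?ref=1", page_html))
```

As the step above notes, confirm that a tag is intentional before removing the URL; a stray noindex is a bug to fix, not a reason to edit the sitemap.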

4. Monitor Search Console Reports. As mentioned previously, the current version of Search Console has a handy Sitemaps report that gives you lots of useful information about your sitemap and the pages listed in it. General reports include:

– Errors. These include 404 Not Found and server error response codes that Google detected in URLs listed in your sitemap.

– Valid with warnings. This report lists URLs that have issues which do not necessarily prevent their indexation but can stunt their search visibility. The most common warning is that of URLs that are indexed despite being blocked by robots.txt. If the URL is indeed intentionally being kept off the search index, take it off the XML sitemap and add a noindex tag to take it off the SERPs. Keep in mind that Google has to crawl the page to see the noindex tag, so the robots.txt block needs to be lifted for the tag to take effect.

– Valid. As the name suggests, these are URLs that are being indexed by Google without any issues. They’re classified into two types, specifically:

• Submitted and Indexed. These are URLs listed in the sitemap that are being indexed correctly.

• Indexed, Not Submitted. These are URLs not found on the XML sitemap but are indexed nonetheless. You’ll want to review these to make sure that you really want them on the SERPs. If they’re thin, duplicate or low-quality pages, it may be better to keep them out of the index with a noindex tag or to block crawling via the robots.txt file, whichever you deem more appropriate. Of the two, only the noindex tag reliably removes a page that is already indexed.

– Excluded. These are the URLs that have been kept off Google’s index for reasons that Google perceives to be correct. These URLs may have been kept off the index by virtue of the webmaster’s instructions via robots.txt, meta robots tags, and other mechanisms or they can be URLs that Google doesn’t deem fit for indexing such as thin or duplicate pages.
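The relationship between these report categories is easy to reproduce yourself with set arithmetic. The sketch below assumes you already have two plain lists, the URLs in your sitemap and the URLs reported as indexed; how you export the latter is not shown here. `compare_coverage` and its labels are hypothetical, loosely modeled on the report names above.

```python
def compare_coverage(sitemap_urls, indexed_urls):
    """Group URLs by whether they are in the sitemap, in the index, or both."""
    submitted = set(sitemap_urls)
    indexed = set(indexed_urls)
    return {
        "submitted_and_indexed": sorted(submitted & indexed),
        "indexed_not_submitted": sorted(indexed - submitted),
        "submitted_not_indexed": sorted(submitted - indexed),
    }


# Hypothetical data for illustration.
sitemap_urls = [
    "https://www.example.com/",
    "https://www.example.com/pricing",
]
indexed_urls = [
    "https://www.example.com/",
    "https://www.example.com/tag/seo/",
]
for label, group in compare_coverage(sitemap_urls, indexed_urls).items():
    print(label, group)
```

The `indexed_not_submitted` group is the one to review for thin or duplicate pages, while `submitted_not_indexed` points to URLs worth investigating in the Errors and Excluded reports.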

We recommend checking your Search Console at least once every quarter so you can stay on top of what’s happening in your XML sitemap. Far too often, changes in the website can trigger unintended scenarios which can affect your search visibility negatively. Taking a glance at your Sitemaps report on Search Console allows you to quickly spot issues that you need to address.

In Summary

XML sitemaps are important components of every successful SEO campaign. They’re easy to put together and optimizing them is mostly a matter of diligence in checking the data and making logical decisions. Maintaining a properly functioning sitemap also isn’t too difficult. As long as you have a good sitemap generator and follow sound content management practices, you should be alright.